William Pinstrup Huey

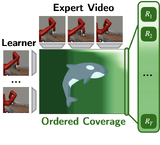

Hi, I’m Will. I’m a Visiting Researcher at the University of Washington, where I’m working on dexterous manipulation with Prof. Abhishek Gupta. Previously, I worked with Prof. Sanjiban Choudhury on learning robot control from videos.

I recently graduated Summa Cum Laude from Cornell with a B.S. in Computer Science (’25). I maintained a 4.1 GPA, was a teaching assistant for Visual Imaging in the Electronic Age, and was a Rawlings Presidential Research Scholar. I spent my summers interning at NASA and Amazon Web Services.

I also enjoy climbing and trail running.

News

| Date | Update |
|---|---|
| Jul 01, 2025 | I started as a Visiting Researcher with Prof. Abhishek Gupta at the University of Washington |
| May 22, 2025 | I graduated Summa Cum Laude from Cornell with a B.S. in Computer Science |
| May 01, 2025 | Imitation Learning from a Single Temporally Misaligned Video was accepted to ICML 2025 |
| Mar 04, 2025 | I won $500 and first place at the RCPRS Grand Slam for my presentation on learning from videos |
| Feb 24, 2025 | I was a panelist at ACSU research night, where I had the opportunity to share my awesome experience doing research at Cornell with other undergraduates |